1. Basic Renderer

A renderer is a collection of algorithms that take a Scene data

structure as its input and produces a FrameBuffer data structure

as its output.

Renderer

+--------------+

Scene | | FrameBuffer

data ====> | Rendering | ====> data

structure | algorithms | structure

| |

+--------------+

A Scene data structure contains information that describes a "virtual

scene" that we want to take a "picture" of. The renderer is kind of like

a digital camera and the FrameBuffer is the camera's film. The renderer

takes (calculates) a picture of the Scene and stores the picture in the

FrameBuffer. The FrameBuffer holds the pixel information that describes

the picture of the virtual scene.

The rendering algorithms can be implemented in hardware (a graphics card or a GPU) or in software. In this class we will write a software renderer using the Java programming language.

Our software renderer is made up of four "packages" of Java classes. Each package is contained in its own directory. The name of the directory is the name of the package.

The first package is the collection of input data structures. This is called

the scene package. The data structure files in the scene package are:

- Scene.java

- Camera.java

- Position.java

- Vector.java

- Model.java

- Primitive.java

- LineSegment.java

- Point.java

- Vertex.java

The Primitive, LineSegment, and Point classes are in a sub-package

called primitives.

The second package is the output data structure. It is called the

framebuffer package and contains the file

- FrameBuffer.java.

The third package is a collection of algorithms that manipulate the

data structures from the other two packages. This package is called

the pipeline package. The algorithm files are:

- Pipeline.java

- Model2Camera.java

- Projection.java

- Viewport.java

- Rasterize.java

- Rasterize_Clip_AntiAlias_Line.java

- Rasterize_Clip_Point.java

The fourth package is a library of geometric models. This package is

called the models_L package. It contains a number of files for geometric

shapes such as sphere, cylinder, cube, cone, pyramid, tetrahedron,

dodecahedron, and mathematical curves and surfaces.

There is also a fifth package, a collection of client programs that use

the renderer. These files are in a folder called clients_r1.

Here is a brief description of the data structures from the scene and

framebuffer packages.

-

A

FrameBufferobject represents a two-dimensional array of pixel data. Pixel data represents the red, green, and blue colors of each pixel in the image that the renderer produces. TheFrameBufferalso defines a two-dimensional sub-array of pixel data called aViewport. -

A

Sceneobject has aCameraobject and aListofPositionobjects. -

A

Cameraobject has a boolean which determines if the camera is a perspective camera or an orthographic camera. -

A

Positionobject has aVectorobject and aModelobject. -

A

Vectorobject has three doubles, the x, y, z coordinates of a vector in 3-dimensional space. AVectorrepresents a location in 3-dimensional space, the location of theModelthat is in aPositionwith theVector. -

A

Modelobject has aListofVertexobjects, aLIstofColorobjects, and aListofPrimitiveobjects. -

A

Vertexobject has three doubles, the x, y, z coordinates of a point in 3-dimensional space. -

A

Colorobject represents the red, green, can blue components of a color. We will use Java's built inColorclass. -

A

Primitiveobject is either aLineSegmentobject or aPointobject. -

A

LineSegmentobject has two lists of two integers each. The two integers in the firstListare indices into theModel'sListof vertices. This lets aLineSegmentobject represent the two endpoints of a line segment in 3-dimensional space. The two integers in the secondListare indices into theModel'sListof colors, one color for each endpoint of the line segment. -

A

Pointobject has three integer values. The first integer is an index into theModel'sListof vertices. This lets aPointobject represent a single point in 3-dimensional space. The second integer is an index into theModel'sListof colors. The third integer is the "diameter" of the point, which lets thePointbe visually represented by a block of pixels.

1.1 Scene tree data structure

When we put all of the above information together, we see that

a Scene object is the root of a tree data structure.

Scene

/ \

/ \

Camera List<Position>

/ | \

/ | \

Position Position Position

/ \ / \ / \

/ \

/ \

Vector Model

/ | \ / | \

x y z /---/ | \---\

/ | \

/ | \

List<Vertex> List<Color> List<Primitive>

/ | \ / | \ / | \

| | |

Vertex Color LineSegment

/ | \ / | \ / \

x y z r g b / \

List<Integer> List<Integer>

(vertices) (colors)

/ \ / \

/ \ / \

Integer Integer Integer Integer

In the renderer.scene.util package there is a file called DrawSceneGraph.java

that can create image files containing pictures of a scene's tree data

structure. The pictures of the tree data structures are actually created

by a program called GraphViz. If you want the renderer to be able to draw

these pictures, then you need to install GraphViz on your computer.

https://en.wikipedia.org/wiki/Scene_graph

1.2 Renderer source code

The Java source code to this renderer is publicly available as a zip file.

Here is a link to the renderer's source code.

http://cs.pnw.edu/~rlkraft/cs45500/for-class/renderer_1.zip

Download and unzip the source code to any convenient location in your computer's file system. The renderer does not have any dependencies other than the Java 11 (or later) JDK (Java Development Kit). So once you have downloaded and unzipped the distribution, you are ready to compile the renderer and run the renderer's example programs.

The following sections of this document describe the organization of the renderer packages and provide instructions for building the renderer and its client programs.

https://openjdk.org/projects/jdk/11/

2. Packages, imports, classpath

Before we go into the details of each renderer package, let us review some of the details of how the Java programming language uses packages.

But first, let us review some of the details of how Java classes are defined and how the Java compiler compiles them.

A Java class is defined in a text file with the same name as the class and with the filename extension ".java". When the compiler compiles the class definition, it produces a binary (machine readable) version of the class and puts the binary code in a file with the same name as the class but with the file name extension ".class".

Every Java class will make references to other Java classes. For example,

here is a simple Java class called SimpleClass that should be stored in

a text file called SimpleClass.java.

import java.util.Scanner;

public class SimpleClass {

public static void main(String[] args) {

final Scanner in = new Scanner(System.in);

final int n = in.nextInt();

System.out.println(n);

}

}

This class refers to the Scanner class, the String class, the System

class, the InputStream class (why?), the PrintStream class (why?), and, in

fact, many other classes. When you compile the source file SimpleClass.java,

the compiler produces the binary file SimpleClass.class. As the compiler

compiles SimpleClass.java, the compiler checks for the existence of all

the classes referred to by SimpleClass.java. For example, while compiling

SimpleClass.java the compiler looks for the file Scanner.class. If it

finds it, the compiler continues with compiling SimpleClass.java (after

the compiler makes sure that your use of Scanner is consistent with the

definition of the Scanner class). But if Scanner.class is not found, then

the compiler looks for the text file Scanner.java. If the compiler finds

Scanner.java, the compiler compiles it to produce Scanner.class, and then

continues with compiling SimpleClass.java. If the compiler cannot find

Scanner.java, then you get a compiler error from compiling SimpleClass.java.

The same goes for all the other classes referred to by SimpleClass.java.

Here is an important question. When the compiler sees, in the compiling of

SimpleClass.java, a reference to the Scanner class, how does the compiler

know where it should look for the files Scanner.class or Scanner.java?

These files could be anywhere in your computer's file system. Should the

compiler search your computer's entire storage drive for the Scanner

class? The answer is no, for two reasons (one kind of obvious and one kind

of subtle). The obvious reason is that the computer's storage drive is very

large and searching it is time consuming. If the compiler has to search your

entire drive for every class reference, it will take way too long to compile

a Java program. The subtle reason is that it is common for computer systems

to have multiple versions of Java stored in the file system. If the compiler

searched the whole storage drive for classes, it might find classes from

different versions of Java and then try to use them together, which does

not work. All the class files must come from the same version of Java.

The compiler needs help in finding Java classes so that it only looks in certain controlled places in the computer's file system and so that it does not choose classes from different versions of Java.

The import statements at the beginning of a Java source file are part of the solution to helping the compiler find class definitions.

An import statement tells the Java compiler how to find a class

definition. In SimpleClass.java, the import statement

import java.util.Scanner;

tells the compiler to find a folder named java and then within that folder

find a folder named util and then within that folder find a class file

named Scanner.class (or a source file named Scanner.java).

The folders in an import statement are called packages. In Java, a package is a folder in your computer's file system that contains a collection of Java class files or Java source files. The purpose of a package is to organize Java classes. In a large software project there will always be many classes. Having all the classes from a project (maybe thousands of them) in one folder would make understanding the project's structure and organization difficult. Combining related classes into a folder helps make the project's structure clearer.

The import statement

import java.util.Scanner;

tells us (and the compiler) that Java has a package named java and a

sub-package named java.util. The Scanner class is in the package

java.util (notice that the package name is java.util, not util).

Look at the Javadoc for the Scanner class.

https://docs.oracle.com/en/java/javase/21/docs/api/java.base/java/util/Scanner.html

The very beginning of the documentation page tells us the package that this class is in.

What about the class String? Where does the compiler look for the String

class? Notice that there is no import statement for the String class. Look

at the Javadoc for the String class.

https://docs.oracle.com/en/java/javase/21/docs/api/java.base/java/lang/String.html

The String class is in a package named java.lang. The java.lang package

is automatically imported for us by the Java compiler. This package contains

classes that are so basic to the Java language the all Java programs will

need them, so these classes are all placed in one package and that package

gets automatically imported by the Java compiler.

We still haven't fully explained how the Java compiler finds the Scanner

class. The import statement

import java.util.Scanner;

tells the compiler to find a folder called java and the Scanner class

will be somewhere inside that folder. But where does the compiler find

the java folder? Should it search your computer's entire file system

for a folder called java? Obviously not, but we seem to be right back

to the problem that we started with. Where does the compiler look in your

computer's file system? The answer is another piece of the Java system,

something called the "classpath".

The classpath is a list of folder names that the compiler starts its search from when it searches for a package. A classpath is written as a string of folder names separated by semicolons (or colons on a Linux computer). A Windows classpath might look like this.

C:\myProject;C:\yourLibrary\utils;D:\important\classes

This classpath has three folder names in its list. A Linux classpath might look like this.

/myProject:/yourLibrary/utils:/important/classes

When you compile a Java source file, you can specify a classpath on the compiler command-line.

> javac -cp C:\myProject;C:\yourLibrary\utils;D:\important\classes MyProgram.java

The Java compiler will only look for packages that are subfolders of the folders listed in the classpath.

The Java compiler has some default folders that it always uses as part of

the classpath, even if you do not specify a value for the classpath. The

JDK that you install on your computer is always part of the compiler's

classpath. So Java packages like java.lang and java.util (and many

other packages), which are part of the JDK, are always in the compiler's

classpath.

If you do not specify a classpath, then the compiler's default classpath will include the directory containing the file being compiled (the current directory). However, if you DO specify a classpath, then the compiler will NOT automatically look in the current directory. Usually, when someone gives the compiler a classpath, they explicitly include the "current directory" in the classpath list. In a classpath, the name you use for the "current directory" is a single period, ".". So a classpath that explicitly includes the current directory might look like this.

> javac -cp .;C:\myProject;C:\yourLibrary\utils;D:\important\classes MyProgram.java

You can put the . anywhere in the classpath, but most people put it at the

beginning of the classpath to make it easier to read. A common mistake is to

specify a classpath but forget to include the current directory in it.

Here is an example of an import statement from our renderer.

import renderer.scene.util.DrawSceneGraph;

This import statement says that there is a folder named renderer with

a subfolder named scene with a subfolder named util that contains a

file named DrawSceneGraph.java (or DrawSceneGraph.class). The file

DrawSceneGraph.java begins with a line of code called a package

statement.

package renderer.scene.util;

A package statement must come before any import statements.

A class file contains a "package statement" declaring where that class file should be located. Any Java program that wants to use that class (a "client" of that class) should include an "import statement" that matches the "package statement" from the class. When the client is compiled, we need to give the compiler a "classpath" that tells the compiler where to find the folders named in the import statements.

A Java class is not required to have a package statement. A class without a package statement becomes part of a special package called the unnamed package. The unnamed package is always automatically imported by the compiler. The unnamed package is used mostly for simple test programs or simple programs demonstrating an idea, or examples programs in introductory programming courses. The unnamed package is never used for library classes or classes that need to be shared as part of a large project.

A Java class file is not required to have any import statements. You can

use any class you want without having to import it. But if you use a class

without importing it, then you must always use the full package name

for the class. Here is an example. If we import the Scanner class,

import java.util.Scanner;

The we can use the Scanner class like this.

final Scanner in = new Scanner(System.in);

But if we do not import the Scanner class, then we can still use it,

but we must always refer to it by its full package name, like this.

final java.util.Scanner in = new java.util.Scanner(System.in);

If you are using a class in many places in your code, then you should import it. But if you are referring to a class in just a single place in your code, then you might choose to not import it and instead use the full package name for the class.

We can import Java classes using the wildcard notation. The following

import statement imports all the classes in the java.util package,

including the Scanner class.

import java.util.*;

There are advantages and disadvantages to using wildcard imports. One

advantage is brevity. If you are using four classes from the java.util

package, then you need only one wildcard import instead of four fully

qualified imports.

One disadvantage is that wildcard imports can lead to name conflicts.

The following program will not compile because both the java.util and

the java.awt packages contain a class called List. And both the

java.util and the java.sql packages contain a class called Date.

import java.util.*; // This package contains a List and a Date class.

import java.awt.*; // This package contains a List class.

import java.sql.*; // This package contains a Date class.

public class Problem {

public static void main(String[] args) {

List list = null; // Which List class?

Date date = null; // Which Date class?

}

}

We can solve this problem by combining a wildcard import with a qualified import.

import java.util.*; // This package contains a List and a Data class.

import java.awt.*; // This package contains a List class.

import java.sql.*; // This package contains a Date class.

import java.awt.List;

import java.sql.Date;

public class ProblemSolved {

public static void main(String[] args) {

List list = null; // From java.awt package.

Date date = null; // From java.sql package.

}

}

You can try compiling these last two examples with the Java Visualizer.

https://cscircles.cemc.uwaterloo.ca/java_visualize/

If you want to see more examples of using packages and classpaths, look at the code in the follow zip file.

http://cs.pnw.edu/~rlkraft/cs45500/for-class/package-examples.zip

There is more to learn about how the Java compiler finds and compiles Java classes. For example, we have not yet said anything about jar files. Later we will see how, and why, we use jar files.

https://dev.java/learn/packages/

https://docs.oracle.com/javase/tutorial/java/package/index.html https://docs.oracle.com/javase/tutorial/java/package/QandE/packages-questions.html https://docs.oracle.com/javase/tutorial/deployment/jar/basicsindex.html

https://en.wikipedia.org/wiki/Classpath

https://docs.oracle.com/javase/specs/jls/se17/html/jls-7.html#jls-7.4.2 https://docs.oracle.com/javase/8/docs/technotes/tools/findingclasses.html

https://en.wikipedia.org/wiki/JAR_(file_format) https://dev.java/learn/jvm/tools/core/jar/

3. Build System

Any project as large as this renderer will need some kind of "build system".

https://en.wikipedia.org/wiki/Build_system_(software_development)

The renderer has over 100 Java source files. To "build" the renderer we need to produce a number of different "artifacts" such as class files, HTML Javadoc files, jar files. We do not want to open every one of the 100 or so Java source code files and compile each one. We need a system that can automatically go through all the sub folders of the renderer and compile every Java source file to a class file, produce the Javadoc HTML files, and then bundle the results into jar files.

Most Java projects use a build system like Maven, Gradle, Ant, or Make.

In this course we will use a much simpler build system consisting of

command-line script files (cmd files on Windows and bash files on

Linux). We will take basic Java command-lines and place them in the

script files. Then by running just a couple of script files, we can

build all the artifacts we need.

We will write script files for compiling all the Java source files (using

the javac command), creating all the Javadoc HTML files (using the javadoc

command), running individual client programs (using the java command), and

bundling the renderer library into jar files (using the jar command). We

will also write script files to automatically "clean up" the renderer folders

by deleting all the artifacts that the build scripts generate.

Here are help pages for the command-line tools that we will use.

- https://dev.java/learn/jvm/tools/core/

- https://dev.java/learn/jvm/tools/core/javac/

- https://dev.java/learn/jvm/tools/core/java/

- https://dev.java/learn/jvm/tools/core/javadoc/

- https://dev.java/learn/jvm/tools/core/jar/

Be sure to look at the contents of all the script files. Most are fairly simple. Understanding them will help you understand the more general build systems used in industry.

There are two main advantages of using such a simple build system.

- No need to install any new software (we use Java's built-in tools).

- It exposes all of its inner workings (nothing is hidden or obscured).

Here are the well known build systems used for large projects.

- https://maven.apache.org/

- https://gradle.org/

- https://ant.apache.org/

- https://www.gnu.org/software/make/

Here is some documentation on the Windows cmd command-line language

and the Linux bash command-line language.

- https://linuxcommand.org/lc3_learning_the_shell.php

- https://learn.microsoft.com/en-us/windows-server/administration/windows-commands/windows-commands

- https://ss64.com/nt/syntax.html

3.1 Building class files

Here is the command line that compiles all the Java files in the scene

package.

> javac -g -Xlint -Xdiags:verbose renderer/scene/*.java

This command-line uses the Java compiler command, javac. The javac

command, like almost all command-line programs, takes command-line arguments

(think of "command-line programs" as functions and "command-line arguments"

as the function's parameters). The -g is the command-line argument that

tells the compiler to produce debugging information so that we can debug

the renderer's code with a visual debugger. The -Xlint is the command-line

argument that tells the compiler to produce all possible warning messages

(not just error messages). The -Xdiags:verbose command-line argument tells

the compiler to put as much information as it can into each error or warning

message. The final command-line argument is the source file to compile. In

this command-line we use file name globbing to compile all the .java

files in the scene folder.

There are a large number of command-line arguments that we can use with the

javac command. All the command-line arguments are documented in the help

page for the javac command.

https://docs.oracle.com/en/java/javase/25/docs/specs/man/javac.html

https://stackoverflow.com/questions/30229465/what-is-file-globbing

https://en.wikipedia.org/wiki/Glob_(programming)

https://ss64.com/nt/syntax-wildcards.html

The script file build_all_classes.cmd contains a command-line like the

above one for each package in the renderer. Executing that script file

compiles the whole renderer, one package at a time.

Two consecutive lines from build_all_classes.cmd look like this.

javac -g -Xlint -Xdiags:verbose renderer/scene/*.java &&^

javac -g -Xlint -Xdiags:verbose renderer/scene/primitives/*.java &&^

The special character ^ at the end of a line tells the Windows operating

system that the current line and the next line are to be considered as one

single (long) command-line. The operator && tells the Windows operating

system to execute the command on its left "and" the command on its right.

But just like the Java "and" operator, this operator is short-circuted.

If the command on the left fails (if it is "false"), then do not execute

the command on the right. The effect of this is to halt the compilation

process as soon as there is a compilation error. Without the &&^ at

the end of each line, the build_all_classes.cmd script would continue

compiling source files even after one of them failed to compile, and

probably generate an extraordinary number of error messages. By stopping

the compilation process at the first error, it becomes easier to see which

file your errors are coming from and prevent spurious false compilation

errors.

The script files in the clients_r1 folder are a bit different. For example,

the script file build_all_clients.cmd contains the following command-line.

javac -g -Xlint -Xdiags:verbose -cp .. *.java

Since the renderer package is in the directory above the clients_r1

folder, this javac command needs a classpath. The .. sets the

classpath to the directory above the current directory (where the

renderer package is).

The script file build_&_run_client.cmd lets us build and run a single

client program (a client program must have a static main() method which

defines the client as a runnable program). This script file is different

because it takes a command-line argument which is the name of the client

program that we want to compile and run. The script file looks like this.

javac -g -Xlint -Xdiags:verbose -cp .. %1

java -cp .;.. %~n1

Both the javac and the java commands need a classpath with .. in it

because the renderer package is in the folder above the current folder,

clients_r1. The java command also needs . in its classpath because

the class we want to run is in the current directory. The %1 in the

javac command represents the script file's command-line argument (the

Java source file to compile). The %~n1 in the java represents the name

from the command-line argument with its file name extension removed. If

%1 is, for example, ThreeDimensionalScene_R1.java, then %~n1 is that

file's basename, ThreeDimensionalScene_R1. The command-line

> build_&_run_client.cmd ThreeDimensionalScene_R1.java

will compile and then run the ThreeDimensionalScene_R1.java client program.

You can also use your mouse to "drag and drop" the Java file

ThreeDimensionalScene_R1.java

onto the script file

build_&_run_client.cmd.

Be sure you try doing this to make sure that the build system works

on your computer.

3.2 Documentation systems and Javadoc

Any project that is meant to be used by other programmers will need documentation of how the project is organized and how its code is supposed to be used. All modern programming languages come with a built-in system for producing documentation directly from the project's source code. The Java language uses a documentation system called Javadoc.

Javadoc is a system for converting your Java source code files into HTML

documentation pages. As you are writing your Java code, you add special

comments to the code and these comments become the source for the Javadoc

web pages. The Java system comes with a special compiler, the javadoc

command, that compiles the Javadoc comments from your source files into

web pages. Most projects make their Javadoc web pages publicly available

using a web server (many projects use GitHub for this).

Here is the entry page to the Javadocs for the Java API.

https://docs.oracle.com/en/java/javase/21/docs/api/java.base/module-summary.html

Here is the Javadoc page for the java.lang.String class.

https://docs.oracle.com/en/java/javase/21/docs/api/java.base/java/lang/String.html

Compare it with the source code in the String.java file.

https://github.com/openjdk/jdk21/blob/master/src/java.base/share/classes/java/lang/String.java

In particular, look at the Javadoc for the subString() method,

and compare it with the method's source code.

https://github.com/openjdk/jdk21/blob/master/src/java.base/share/classes/java/lang/String.java#L2806

Here is some documentation about the Javadoc documentation system.

- https://books.trinket.io/thinkjava2/appendix-b.html

- https://dev.java/learn/jvm/tools/core/javadoc/

- https://www.oracle.com/technical-resources/articles/java/javadoc-tool.html

- https://link.springer.com/content/pdf/bbm:978-1-4842-7307-4/1#page=12

Here are what documentation systems look like for several modern programming languages.

- The JavaScript language use a system called JSDoc, https://jsdoc.app

- The TypeScript language uses a system called TSDoc, https://tsdoc.org

- The Python language uses a system called Sphinx, https://www.sphinx-doc.org/en/master/

- The Rust language uses a system called rustdoc, https://doc.rust-lang.org/rustdoc/

- The Haskell language uses a system called Haddock, https://haskell-haddock.readthedocs.io

3.3 Building the Javadoc files

The script file build_all_Javadocs.cmd uses the javadoc command to

create a folder called html and fill it with the Javadoc HTML files

for the whole renderer. The javadoc command is fairly complex since

it has many options and it has to list all the renderer's packages

on a single command-line.

javadoc -d html -Xdoclint:all,-missing -link https://docs.oracle.com/en/java/javase/21/docs/api/ -linksource -quiet -nohelp -nosince -nodeprecatedlist -nodeprecated -version -author -overview renderer/overview.html -tag param -tag return -tag throws renderer.scene renderer.scene.primitives renderer.scene.util renderer.models_L renderer.models_L.turtlegraphics renderer.pipeline renderer.framebuffer

You should use the javadoc command's help page to look up each command-line

argument used in this command and see what its purpose is. For example, what

is the meaning of the -Xdoclint:all,-missing argument? What about the -d

argument?

After the Javadoc files are created, open the html folder and double

click on the file index.html. That will open the Javadoc entry page

in your browser.

https://docs.oracle.com/en/java/javase/25/docs/specs/man/javadoc.html

3.4 Jar files

Jar files are an efficient way to make large Java projects available to other programmers.

If we want to share the renderer project with someone, we could just give them all the folders containing the source code and then they could build the class files and the Javadocs for themselves. But for someone who just wants to use the library, and is not interested in how it is written, this is a bit cumbersome. What they want is not a "source code distribution" of the project. They want a "binary distribution" that has already been built. But they do not want multiple folders containing lots of packages and class files. That is still too cumbersome. They would like to have just a single file that encapsulates the entire project. That is what a jar file is.

A jar file (a "java archive") is a file that contains all the class

files from a project. A jar file is really a zip file. That is how it can

be a single file that (efficiently) contains a large number of files. If

you double click on the script file build_all_classes.cmd and then double

click on build_jar_files.cmd, that will create the file renderer_1.jar.

Try changing the ".jar" extension to a ".zip" extension. Then you can open

the file as a zip file and see all the class files that are in it.

The script file build_jar_files.cmd uses the jar command to build two

jar files, one containing the renderer's compiled binary class files and

the other containing the renderer's source code files.

Here is the command-line that builds the renderer_1.jar file. The jar

command, like the javadoc command, is long because it needs to list all

the renderer packages on a single command-line. This command-line assumes

that you have already build all of the renderer's class files (notice the

use of "file name globbing").

jar cvf renderer_1.jar renderer/scene/*.class renderer/scene/primitives/*.class renderer/scene/util/*.class renderer/models_L/*.class renderer/models_L/turtlegraphics/*.class renderer/pipeline/*.class renderer/framebuffer/*.class

Use the jar command's help page to look up each command-line argument used

in this command. For example, what is the meaning of cvf? (That command-line

argument is actually three options to the jar command.)

https://docs.oracle.com/en/java/javase/25/docs/specs/man/jar.html

https://docs.oracle.com/javase/tutorial/deployment/jar/basicsindex.html

https://en.wikipedia.org/wiki/JAR_(file_format)

The way the jar command handles options may seem a bit strange. The Java

jar command is actually based on the very famous Linux/Unix tar command

(the "tape archive" command). The way the options are processed is explained

in the tar man-page.

https://man7.org/linux/man-pages/man1/tar.1.html#DESCRIPTION

3.5 Jar files and the classpath

When you include a folder in Java's classpath, the Java compiler, or Java Virtual Machine, will find any class files that you put in that folder. But, a bit surprisingly, the compiler and the JVM will ignore any jar files in that folder. If you want the compiler, or the JVM, to find class files that are inside of a jar file, then you need to explicitly add the jar file to the classpath.

Earlier we define the classpath as a list of folder names. Now we can say that the classpath is a list of folder names and jar file names.

Let's consider an example of using a jar file. Use the script file

build_jar_files.cmd

to build the renderer_1.jar file. Then create a folder called jar-example

(anywhere in your computer's file system) and place into that folder the

renderer_1.jar file and the ThreeDimensionalScene_R1.java file from

this renderer's clients_r1 folder.

\---jar-example

| renderer_1.jar

| ThreeDimensionalScene_R1.java

The jar file provides all the information that we need to compile and

run the renderer's client program ThreeDimensionalScene_R1.java. Open

a command-line prompt in your jar-example folder. Compile the source

file with this classpath in the javac command-line.

jar-example> javac -cp renderer_1.jar ThreeDimensionalScene_R1.java

Then run the client program with this classpath in the java command-line.

jar-example> java -cp .;renderer_1.jar ThreeDimensionalScene_R1

Notice the slight difference in the classpath for the javac and java

commands. For javac, since we are specifying the source file on the

command-line, and all the needed class files are in the jar file, we

do not need the current directory in the classpath. But in the java

command, we need all the class files in the jar file AND we need the

one class file in the current director, so we need the current directory

in the classpath. One very subtle aspect of the java command is that the

name ThreeDimensionalScene_R1 is NOT the name of a file, it is the name

of a class, and that class needs to be in the classpath. Another way to

think about this is that javac commands needs the name of a Java source

FILE but the java command needs the name of a CLASS (not a class file!).

We can give the javac command the full path name or a (valid) relative

path name of a source file and it will find the file. But we must give

the java command the full package name of a class (not the full path

name of the file that holds the class, that will never work).

> javac -cp <...> Path_to_Java_source_file.java

> java -cp <...> Full_package_name_of_a_Java_class

3.6 Jar files and VS Code

The renderer_1.jar file can be used by the VS Code editor so that the IDE

can compile programs that use the renderer library (like your homework

assignments).

Do this experiment. Open another command-line prompt in the jar-example

folder that you created in the last section. Type this command to start

VS Code in the jar-example folder.

jar-example> code .

This command-line is read as "code here" or "code dot". This command tells the Windows operating system to start the VS Code editor in the current directory. This makes VS Code open the directory as a project.

Find the file ThreeDimensionalScene_R1.java in the left hand pane of

VS Code. After you open ThreeDimensionalScene_R1.java you will see that

it is filled with little red squiggly lines that mean that the classes

cannot be found. VS Code does not (yet) know how to find classes from the

renderer. But all those classes are in the jar file renderer_1.jar in the

folder with the file ThreeDimensionalScene_R1.java. But VS Code does not

(yet) know that it should use that jar file. We need to configure the

classpath that is used by VS Code. Near the bottom of VS Code's left pane

look for and open an item called "JAVA PROJECTS". In its "Navigation Bar"

click on the "..." item (labeled "More Actions...") and select

"Configure Classpath". Here is a picture.

https://code.visualstudio.com/assets/docs/java/java-project/projectmanager-overview.png

When the "Configure Classpath" window opens, click on the "Libraries" tab.

Click on "Add Library..." and select the renderer_1.jar file to add it

to the VS Code classpath.

After you add renderer_1.jar to VS Code's classpath, go back to the

ThreeDimensionalScene_R1.java file. All the little red squiggly lines

should be gone and you should be able to build and run the program.

The actions that you just took with the VS Code GUI had the effect of

creating a new subfolder and a new configuration file in the jar-example

folder. Open the jar-example folder and you should now see a new sub-folder

called .vscode that contains a new file called settings.json.

\---jar-example

| renderer_1.jar

| ThreeDimensionalScene_R1.java

|

\---.vscode

settings.json

The settings.json file holds the new classpath information for VS Code.

Here is what settings.json should look like.

{

"java.project.sourcePaths": [

"."

],

"java.project.referencedLibraries": [

"renderer_1.jar",

]

}

You can actually bypass the GUI configuration steps and just create this

folder and config file yourself. Many experienced VS Code users directly

edit their settings.json file, using, of course, VS Code. Try it. Use

VS Code to look for, and open, the settings.json file.

Now do another experiment. In VS Code, go back to the ThreeDimensionalScene_R1.java

file and hover your mouse, for several seconds, over the setColor() method

name in line 37. You should get what Microsoft calls an IntelliSense tool tip

giving you information about that method (taken from the method's Javadoc).

But the tool tips do not (yet) work for the renderer's classes. The VS Code

editor does not (yet) have the Javadoc information it needs about the

renderer's classes.

The build_jar_files.cmd script file created a second jar file called

renderer_1-sources.jar. This jar file holds all the source files from the

renderer project. This jar file can be used by VS Code to give you its

IntelliSense tool-tip information and code completion for all the renderer

classes.

Copy the file renderer_1-sources.jar from the renderer_1 folder to the

jar-example folder.

\---jar-example

| renderer_1-sources.jar

| renderer_1.jar

| ThreeDimensionalScene_R1.java

|

\---.vscode

settings.json

You may need to quit and restart VS Code, but VS Code should now be able to give you Javadoc tool tips when you hover your mouse (for several seconds) over any method from the renderer's classes.

NOTE: You usually do not need to explicitly add the renderer_1-sources.jar

file to the VS Code classpath. If you have added a jar file to VS Code, say

foo.jar, then VS Code is supposed to also automatically open a jar file

called foo-sources.jar if it is in the same folder as foo.jar.

FINAL NOTE: DO all the experiments mentioned in the last two sections. The experience of doing all these steps and having to figure out what you are doing wrong is far more valuable than you might think!

https://code.visualstudio.com/docs/java/java-project

https://code.visualstudio.com/docs/java/java-project#_configure-classpath-for-unmanaged-folders

If you are on the PNW campus, then you can download the following book about VS Code (you have permission to download the book, for free, while on campus because of the PNW library).

https://link.springer.com/book/10.1007/978-1-4842-9484-0

3.7 Build system summary

Every programming language needs to provide tools for working on large projects (sometimes referred to as "programming in the large").

A language should provide us with

- a system for organizing our code,

- a system for documenting our code,

- a system for building our code's artifacts,

- a system for distributing those artifacts.

For this Java renderer project we use

- classes and packages,

- Javadocs, Readmes,

- command-line scripts,

- jar files, zip files.

When you learn a new programming language, eventually you get to the stage where you need to learn the language's tools for supporting programming in the large. Learn to think in terms of how you would organize, document, build, and distribute a project.

https://en.wikipedia.org/wiki/Programming_in_the_large_and_programming_in_the_small

https://mitcommlab.mit.edu/broad/commkit/best-practices-for-coding-organization-and-documentation/

4. FrameBuffer data structure

The FrameBuffer class represents the output from our renderer.

We will consider FrameBuffer to be an abstract data type (ADT)

that has a public interface and a private implementation.

The public interface, also referred to as the class's API, defines how a

programmer works with the data type. What are its constructors and what

methods does it make available to the programmer? The public interface

to the FrameBuffer class is documented in its Javadocs. Be sure to build

and read the Javadocs for the framebuffer package.

The private implementation is the details of the class as defined in the

FrameBuffer.java source file. When you first learn about a new class,

you almost never need to know the details of its private implementation.

After you become comfortable working with the class's API, then you might

be interested in looking at its implementation. If you need to maintain or

modify a class, then you must become familiar with its implementation (its

source code).

https://en.wikipedia.org/wiki/Abstract_data_type

https://en.wikipedia.org/wiki/API

4.1 FrameBuffer interface

A FrameBuffer represents a two-dimensional array of pixel data that

can be displayed on a computer's screen as an image (a picture).

The public interface that the FrameBuffer class presents to its clients

is a two-dimensional array of colored pixels. A FrameBuffer constructor

has two parameters, widthFB and heightFB, that determine the dimensions

of the array of pixels (once a FrameBuffer is constructed, its dimensions

are immutable).

A FrameBuffer defines a two-dimensional coordinate system for its pixels.

The pixel coordinates are written like the (x, y) coordinates in a plane.

The pixel with coordinates (0, 0) is the pixel in the upper left-hand

corner. We call this pixel the "origin" of the coordinate system. The

x-coordinates increase to the right and the y-coordinates increase

downwards (there are no valid negative coordinates).

The FrameBuffer class has methods setPixelFB() and getPixelFB() for

setting and getting the color of any pixel in a FrameBuffer object. There

are also methods for storing a FrameBuffer object as an image file in the

computer's file system.

Here is a picture of a h-by-w FrameBuffer with h = 6 and w = 12.

Notice that the pixel in the upper left-hand corner of the FrameBuffer

has coordinates (0, 0) (the origin). The pixel with coordinates

(x, y) = (6, 2) is marked in the picture. That pixel is in the seventh

column and the third row (from the top). The pixel in the lower right-hand

corner has coordinates (x, y) = (w-1, h-1) = (11, 5).

0 1 2 3 4 5 6 7 8 9 10 11

+--+--+--+--+--+--+--+--+--+--+--+--+

0 | | | | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

1 | | | | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

2 | | | | | | |##| | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

3 | | | | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

4 | | | | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

5 | | | | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+

Each pixel in a FrameBuffer represents the color of a single "dot" in

a computer's display screen. Most computer displays have around 1,000

horizontal pixels and 1,000 vertical pixels. The "resolution" of computer

displays varies a lot. You should find out the resolution of the display

that you are currently looking at.

We are going to take the view that Color is another abstract data type

(with a public interface and a private implementation). The Color class

is defined for us by the Java class library (in the java.awt package).

A Color object has three components, the amount of red, green, and blue

that is mixed into the color represented by the Color object.

There is much more to the FrameBuffer interface. Build and read the

Javadocs for the FrameBuffer class so that you can see the whole interface

documented in one place. (Use the script file build_all_Javadocs.cmd

to create the html folder that holds the renderer's Javadocs. Use your

browser to open the file html/index.html and then navigate to the Javadoc

page for the FrameBuffer class.)

Then, even more importantly, read the example code in the

renderer_1/clients_r1

folder and the

framebuffer-viewport-pixel-examples

sub folder. Build and run the example programs. Try making simple changes to

the example programs. Come up with your own examples of things that you

can do with a FrameBuffer.

https://en.wikipedia.org/wiki/Framebuffer

https://en.wikipedia.org/wiki/Pixel

https://en.wikipedia.org/wiki/Display_resolution

https://docs.oracle.com/en/java/javase/21/docs/api/java.desktop/java/awt/Color.html

4.2 FrameBuffer implementation

When you use an abstract data type, you normally don't need to know the

details of its (private) implementation. But since our goal is to write

the implementation of a renderer, we need to determine the details of our

implementation of the FrameBuffer interface. Since a FrameBuffer appears

to its clients to be a two-dimensional array of colors, you might expect

the FrameBuffer class to be implemented as a two-dimensional array of

Color objects, Color[][]. But that would not be a good implementation.

We shall implement the FrameBuffer class as a one-dimensional array of

integers, int[]. This array is called the pixel_buffer.

Remember that a Color object has three components, the amount of red,

green, and blue that make up the color. The human eye can see several

hundred shades of each primary color, red, green, and blue. Since our

eyes see several hundred shades of red, it is convenient to use 8 bits

(256 distinct values) to represent shades of red. Similarly for shades

of green and blue. So we need 24 bits to represent a shade of color

(notice that there are 256^3 = 2^24 = 16,777,216 distinct color shades).

A Java int is 32 bits, so we can fit the three bytes of red, green, and

blue data into a single int (and have 8 bits left over for later use).

A Java int is much more compact (in the computer's memory) that a Java

Color object. That is one reason why our FrameBuffer implementation

will use an array of (primitive) int instead of the more obvious array

of Color objects.

If a FrameBuffer represents h rows by w columns of pixels, then the

FrameBuffer's pixel_buffer holds h * w integers. Our implementation of

the FameBuffer interface does NOT store its pixel data as a two-dimensional

h-by-w array of integers (nor is it stored as a three-dimensional h-by-w-by-3

array of bytes). Our implementation of the FrameBuffer interface will store

its pixel data as a one-dimensional h * w array of integers. This

one-dimensional array is the row major form of the two-dimensional data,

meaning that the first w integers in the one-dimensional array are the

pixels from the framebuffer's first row. The next w integers in the array

are the pixels from the framebuffer's second row, etc. The first w

integers (the first row of pixels) represent the top row of pixels when

the framebuffer's image is displayed on a computer's screen.

https://en.wikipedia.org/wiki/Row-_and_column-major_order

Here is a picture of a very small h-by-w FrameBuffer (with h = 4 and

w = 7) and its array-of-rows pixel_buffer below it. Four rows and

seven columns means there are 28 pixels.

0 1 2 3 4 5 6

+--+--+--+--+--+--+--+

0 | | | | | | | |

+--+--+--+--+--+--+--+

1 | | | | | | | |

+--+--+--+--+--+--+--+

2 | | | |##| | | |

+--+--+--+--+--+--+--+

3 | | | | | | | |

+--+--+--+--+--+--+--+

| row 0 | row 1 | row 2 | row 3 |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

| | | | | | | | | | | | | | | | | |##| | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

Let us see how we would translate a two-dimensional pixel address, (x, y),

into a one-dimensional index in the pixel_buffer. Consider the pixel at

(3, 2) (column 3 and row 2) which is marked in the above picture. In the

one-dimensional pixel_buffer array, this pixel needs to skip over two whole

rows (of 7 pixels each) and then skip over three more pixels. So the index

of this pixel is 2 * 7 + 3 = 17. In general, in a FrameBuffer with width w,

a pixel at address (x, y) needs to skip over y rows (of w pixels each)

and then skip over an additional x pixels, so the pixel has an index in the

one-dimensional pixle_buffer given by

index = (y * w) + x

This formula is used by both the setPixelFB() and getPixelFB()methods

in the FrameBuffer class.

To get a better idea of how the FrameBufferinterface is implemented, let us

look at a very bare bones, minimal implementation of the FrameBuffer class.

The following FrameBuffer definition allows us to instantiate a FrameBuffer

object, read and write pixel data into the object, and print a string

representation of the object. The string representation is formatted to show

the FrameBuffer as rows of r, g, b values. This works fine for small

framebuffers (less than 20 rows by 20 columns).

import java.awt.Color;

class FrameBuffer {

public final int width; // Instance variables.

public final int height;

public final int[] pixel_buffer;

public FrameBuffer(int width, int height) {

this.width = width;

this.height = height;

this.pixel_buffer = new int[this.width * this.height];

}

public void setPixelFB(int x, int y, Color c) {

pixel_buffer[(y * width) + x] = c.getRGB();

}

public Color getPixelFB(int x, int y) {

return new Color( pixel_buffer[(y * width) + x] );

}

@Override public String toString() {

String result = "FrameBuffer [w=" + width + ", h=" + height + "]\n";

for (int y = 0; y < width; ++y) { result += " r g b |"; }

result += "\n";

for (int y = 0; y < height; ++y) {

for (int x = 0; x < width; ++x) {

final int c = pixel_buffer[(y * width) + x];

final Color color = new Color(c);

result += String.format("%3d ", color.getRed())

+ String.format("%3d ", color.getGreen())

+ String.format("%3d|", color.getBlue());

}

result += "\n";

}

return result;

}

}

Notice that the setPixelFB() and getPixelFB() methods take two coordinate

parameters, an x and a y. The setPixelFB() method also takes a Color

parameter, and the getPixelFB() method returns a Color object. These

two methods represent the FrameBuffer as a 2-dimensional array of Color

objects (the public interface). But the data for the FrameBuffer is

stored in a 1-dimensional array of int (the private implementation).

The getPixelFB() and setPixelFB() methods do the translation from two

dimensions to one dimension, and the translation of a Color object to

an int value. (In other words, setPixelFB() translates from the public

interface to the private implementation, and getPixelFB() translates

from the private implementation to the public interface.)

Here is a sample program that creates a (small) FrameBuffer and draws

two diagonal lines in it. The program then prints the string representation

of the FrameBuffer object.

import java.awt.Color;

import java.util.Arrays;

public class TestFrameBuffer {

public static void main(String[] args) {

final FrameBuffer fb = new FrameBuffer(11, 11);

for (int y = 0; y < fb.height; ++y)

for (int x = 0; x < fb.width; ++x) {

fb.setPixelFB(x, x, Color.white);

fb.setPixelFB(fb.width - 1 - x, x, Color.white);

}

System.out.println( fb );

//System.out.println( Arrays.toString(fb.pixel_buffer) );

}

}

Here is what the string representation looks like after the two

diagonal lines are drawn in the FrameBuffer. Notice that this

string represents the FrameBuffer in a way that matches it public

interface (a two-dimensional array of colors). We could also print

out the pixel_buffer array from the FrameBuffer object (the

private implementation) but it is not very helpful.

FrameBuffer [w=11, h=11]

r g b | r g b | r g b | r g b | r g b | r g b | r g b | r g b | r g b | r g b | r g b |

255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255|

0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0|

0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0|

0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0|

0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0|

0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|

0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0|

0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0|

0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0| 0 0 0|

0 0 0|255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255| 0 0 0|

255 255 255| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0| 0 0 0|255 255 255|

The last two class definitions, for FrameBuffer and TestFrameBuffer,

are compilable and runnable. Try running these examples and make sure

you get the output shown above. Also, try modifying and experimenting

with these examples.

4.3 FrameBuffer objects and PPM files

An instance of the FrameBuffer class is a data structure (a Java object).

It "lives" inside the computer's memory system (in the Java heap). We cannot

see a FrameBuffer object (but we can "visualize" it using its toString()

method). But the whole point of computer graphics is to see the 3D images the

renderer can create. So how do we actually see the image that a FrameBufffer

object represents?

We need to copy the data from a FrameBuffer object into an image file in

the computer's file system (that is, we need to copy data from the computer's

memory system to the computer's file system). When you take a picture with a

digital camera, the picture ends up as an image file in the camera's storage

device. You can "open" that image file and see the picture it represents

displayed on a screen. You can send that image file to someone else's

computer and then they can see the image displayed on their screen. Image

files are how we preserve the data from a FrameBuffer object.

An image file format is a specific way to store pixel data in a file. There are a large number of image file formats. Most of the well known ones (png, jpeg, heic) are more complicated than what we need. We will use a simple image file format that is popular with programmers who experiment with 3D renderers, the PPM file format.

A PPM file has two parts, the meta-data part and the pixel-data part. The meta-data part is data about the pixel-data part. Before we can explain the meta-data part, we need to explain the pixel-data part.

The pixel-data in a PPM file is a sequence of (binary) bytes, three bytes for every pixel in the image represented by the PPM file. If a PPM file represents an image that is 300 pixels wide and 200 pixels tall, then there are 60,000 pixels in the image and 180,000 bytes in the pixel-data part of the PPM file. The first three bytes in the pixel-data are the bytes for the first pixel in the image (the upper left-hand corner of the image). The three bytes for each pixel are in the order red, green, blue.

We just said that an image that is 300 pixels wide and 200 pixels tall will have 180,000 bytes of pixel-data. But suppose you are presented with 180,000 bytes of pixel-data. How wide is the image? How tall is the image? Maybe it is 300 pixels wide and 200 pixels tall, but maybe it is 200 pixels wide and 300 pixels tall. Or maybe the image represented by this pixel-data is 400 pixels wide and 150 pixels tall (or 150 pixels wide and 400 pixels tall). All of the following image dimensions have the same number of bytes of pixel-data.

- 300 by 200

- 200 by 300

- 100 by 600

- 400 by 150

- 800 by 75

The pixel-data in a PPM file is ambiguous. By itself it cannot tell us what the dimensions are of the image it represents. So we need more information about the pixel-data. That is the "meta data" (data about data). The first part of a PPM file, the meta-data part, tells us the dimensions of the image represented by the pixel-data.

A PPM file begins with three lines of ASCII text. The first line is called the "magic number" and it should contain the string "P6". The second line contains the dimensions of the image and should put the width first, followed by a space, and then the height. The third line should contain the string "255" to specify that we are using 255 values for each of red, green, and blue (i.e., one byte).

The meta-data (also called the "file header") for the PPM file of an image that is 300 pixels wide and 200 pixels tall would be,

P6

300 200

255

and then this meta-data would be immediately followed in the file by the 180,000 bytes of pixel-data.

One somewhat odd characteristic of a P6 PPM file is that it is both a "text file" and a "binary file". The first part of the PPM file, the meta-data, is ASCII text. But the second part of the PPM file, the pixel-data, is binary data. You should open a PPM file in a text editor and notice that the meta-data is clearly readable, but where the meta-data ends, the file becomes "unreadable".

The FrameBuffer class has methods for saving a FrameBuffer object as a PPM

file. The FrameBuffer class also has a constructor for creating a FrameBuffer

object initialized with the pixel data from a PPM file. See the FrameBuffer

Javadocs for details.

https://en.wikipedia.org/wiki/Netpbm#File_formats

https://en.wikipedia.org/wiki/Image_file_format

https://developer.mozilla.org/en-US/docs/Web/Media/Guides/Formats/Image_types

https://en.wikipedia.org/wiki/Metadata

5. Viewport data structure

The Viewport class represents a rectangular region of pixels in a

FrameBuffer. We will consider Viewport to be another abstract data

type with a public interface and a private implementation.

Be sure to build the Javadocs for the framebuffer package and look at

the Javadocs for the Viewport class (its public interface).

After you become familiar with the Viewport interface from the Javadocs

and the code examples, then you can look at the source code for the

Viewport class to learn the details of its implementation.

5.1 Viewport interface

The FrameBuffer class defines a nested Viewport class which represents

a rectangular sub-array of the pixel data in the FrameBuffer. A Viewport

is the active part of the FrameBuffer; it is the part of the FrameBuffer

that the renderer writes pixel data into.

The Viewport interface is similar to theFrameBuffer` interface.

A Viewport is determined by its width and height and the position of its

upper left-hand corner in the FrameBuffer. A Viewport constructor has

four parameters, widthVP, heightVP. ul_x and ul_y. In order that a

Viewport be completely contained in its Framebuffer, we should have

ul_x + widthVP < widthFB and

ul_y + heightVP < heightFB

where widthFB and heightFB are the width and the height of the

FrameBuffer.

Each Viewport has its own (x,y)-coordinate system for the pixels within

the Viewport. This coordinate system has its origin, (0,0), at the upper

left-hand corner of the Viewport. The x-coordinates increase to the right

and the y-coordinates increase downwards.

The Viewport class has methods setPixelVP() and getPixelVP()for setting

and getting the color of any pixel in a Viewport. There are also methods

for storing a Viewport as an image file in the computer's file system.

Here is an illustration of a FrameBuffer that has n rows by m columns

of pixels with a Viewport that has w rows and h columns. Notice how,

in this picture, the upper left-hand corner of the Viewport is labeled

(0,0). This is that pixel's coordinate in the Viewport's coordinate

system. In the coordinate system of the FrameBuffer that pixel has the

coordinate (x_ul, y_ul).

(0,0)

+-------------------------------------------+

| |

| |

| (0,0) |

| +------------+ |

| | | |

| | | |

| | | |

| | | |

| +------------+ |

| (w-1,h-1) |

| |

| |

| |

| |

+-------------------------------------------+

(m-1,n-1)

Quite often a Viewport will be the whole FrameBuffer. A Viewport

that is smaller than the whole FrameBuffer can be used to implement

special effects like "split screen" (two independent images in the

FrameBuffer), or "picture in a picture" (a smaller picture superimposed

on a larger picture). In future renderers (starting with renderer 5),

another use of a Viewport that is not the whole FrameBuffer is when we

want to display an image with an aspect ratio that is different than the

aspect ratio of the FrameBuffer.

https://en.wikipedia.org/wiki/Split_screen_(computer_graphics)

https://en.wikipedia.org/wiki/Picture-in-picture

5.2 Viewport implementation

The Viewport class is implemented as a non-static nested class

(also called an inner class) within the FrameBuffer class. Inner

classes are not always covered in introductory Java textbooks but

they are fairly common in the design of larger software systems.

https://docs.oracle.com/javase/tutorial/java/javaOO/nested.html

https://dev.java/learn/classes-objects/nested-classes/

https://www.baeldung.com/java-nested-classes

A nested class (inner class) is a class defined inside the definition

of some other class (the outer class). Here is a (very) brief outline

of the FrameBufer class and its inner Viewport class.

import java.awt.Color;

class FrameBuffer

{

final int widthFB; // Instance variables.

final int heightFB;

final int[] pixel_buffer;

public FrameBuffer(int widthFB, int heightFB)

{

this.widthFB = widthFB;

this.heightFB = heightFB;

this.pixel_buffer = new int[widthFB * heightFB];

}

public void setPixelFB(int x, int y, Color c)

{

pixel_buffer[(y * widthFB) + x] = c.getRGB();

}

public Color getPixelFB(int x, int y)

{

return new Color( pixel_buffer[(y * widthFB) + x] );

}

public class Viewport // Inner class.

{

final int vp_ul_x; // Instance variables for inner class.

final int vp_ul_y;

final int widthVP;

final int heightVP;

public Viewport(int ul_x, int ul_y, int widthVP, int heightVP)

{

this.vp_ul_x = ul_x;

this.vp_ul_y = ul_y;

this.widthVP = widthVP;

this.heightVP = heightVP;

}

public void setPixelVP(int x, int y, Color c)

{

setPixelFB(vp_ul_x + x, vp_ul_y + y, c);

}

public Color getPixelVP(int x, int y)

{

return getPixelFB(vp_ul_x + x, vp_ul_y + y);

}

}

}

A nested class is defined in a way that is similar to how methods are

defined. A method is nested within a class definition and a method has

access to all the fields and other methods defined in the class. The same

is true for a nested class; it has access to all the fields and methods

defined in its outer class. But this is a very subtle idea. In order that

a nested class have access to the instance fields of its outer class, the

nested class must be instantiated with respect to a specific instance of

the outer class. In other words, an instance of the inner class cannot

have access to the fields of every and any instance of the outer class.

It would only make sense for an instance of the inner class to have access

to the fields of a specific instance of the outer class. For example, here

is the code for instantiating a FrameBuffer object and an associated

Viewport object.

final FrameBuffer fb = new FrameBuffer(100, 100);

final FrameBuffer.Viewport vp = fb.new Viewport(20, 20, 50, 50);

The FrameBuffer.Viewport notation is because the ViewPort class is a member

class of the FrameBuffer class. The fb.new notation is what specifies that

the new instance of the Viewport class must be tied to the fb instance of

FrameBuffer.

Notice that there is no pixel-array in the definition of the Viewport

class. A Viewport object does not store any pixel data. Instead of pixel

data, a Viewport object has a (hidden) reference to its FrameBuffer object.

The Viewport object vp has access to all the fields and methods of the

FrameBuufer object fb (using vp's hidden reference to fb). In particular,

a Viewport has access to the pixel_buffer of the FrameBuffer. A Viewport

object gets all of its pixel data from the FrameBuffer object it is tied

to. When you access a pixel within a Viewport object, using either the

getPixelVP() or setPixleVP() methods, you are really accessing a pixel

in the FrameBuffer object that owns that Viewport object.

Here is an illustration of a FrameBuffer containing two Viewports and

another FrameBuffer containing a single Viewport.

fb1

+------------------------------------------+

| | fb2

| +------------+ | +-----------------------------+

| | | | | |

| | | | | +------------+ |

| | | | | | | |

| | | | | | | |

| +------------+ | | | | |

| | | | | |

| +------------+ | | +------------+ |

| | | | | |

| | | | | |

| | | | +-----------------------------+

| | | |

| +------------+ |

+------------------------------------------+

Here is code that can instantiates these five objects.

final FrameBuffer fb1 = new FrameBuffer(300, 250);

final FrameBuffer fb2 = new FrameBuffer(200, 150);

final FrameBuffer.Viewport vp1 = fb1.new Viewport( 30, 30, 100, 100);

final FrameBuffer.Viewport vp2 = fb1.new Viewport(150, 150, 75, 75);

final FrameBuffer.Viewport vp3 = fb2.new Viewport( 30, 30, 80, 80);

Remember that the fb1.new and fb2.new notation reminds us that each

instance of the Viewport class must be tied to a specific instance

of the FrameBuffer class.

After executing these five lines of code, the Java heap will contain five

objects, two FrameBuffer objects and three ViewPort objects. Each

Viewport object is "tied" to a specific FrameBuffer object (using a

hidden reference variable in the Viewport object). A Viewport does

not itself store any pixel data. Each Viewport object references its

FrameBuffer object to access the pixels that are represented by the

Viewport.

Let us look more carefully at an example of a FrameBuffer containing a

Viewport. Here is code that instantiates a (small) FrameBuffer that

has 5 rows and 8 columns of pixels with a (even smaller) Viewport that

has 3 rows and 4 columns, and with the Viewport's upper left-hand corner

at pixel (2, 1) in the FrameBuffer.

final FrameBuffer fb = new FrameBuffer(8, 5);

final FrameBuffer.Viewport vp = fb.new Viewport(2, 1, 4, 3);

Here is a representation of this FrameBuffer and its Viewport.

0 1 2 3 4 5 6 7

+--+--+--+--+--+--+--+--+

0 | | | | | | | | |

+--+--+--+--+--+--+--+--+

1 | | |##|##|##|##| | |

+--+--+--+--+--+--+--+--+

2 | | |##|##|##|##| | |

+--+--+--+--+--+--+--+--+

3 | | |##|##|##|##| | |

+--+--+--+--+--+--+--+--+

4 | | | | | | | | |

+--+--+--+--+--+--+--+--+

Here is how the rows of the Viewport are positioned within the

FrameBuffer's one-dimensional array-of-rows pixel_buffer. Notice

that the Viewport's three rows are NOT contiguous within the

pixel_buffer.

| row 0 | row 1 | row 2 | row 3 | row 4 |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

| | | | | | | | | | |##|##|##|##| | | | |##|##|##|##| | | | |##|##|##|##| | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39

Given a Viewport pixel with coordinates (i, j)``, we know that itsFramebuffercoordinates are(x_ul + i, y_ul + j). From thoseFrameBuffercoordinates we

know that the(i, j)pixel from theViewporthas the followingindexin theFrameBuffer'spixel_buffer`.

index = (y_ul + j) * w + (x_ul + i)

For example, consider pixel (2, 1) in the Viewport.

0 1 2 3 4 5 6 7

+--+--+--+--+--+--+--+--+

0 | | | | | | | | |

+--+--+--+--+--+--+--+--+

1 | | |##|##|##|##| | |

+--+--+--+--+--+--+--+--+

2 | | |##|##|@@|##| | |

+--+--+--+--+--+--+--+--+

3 | | |##|##|##|##| | |

+--+--+--+--+--+--+--+--+

4 | | | | | | | | |

+--+--+--+--+--+--+--+--+

It is pixel (x_ul + i, y_ul + j) = (2 + 2, 1 + 1) = (4, 2) in the

FrameBuffer. That FrameBuffer pixel has the following index in the

pixel_buffer.

index = (y_ul + j) * w + (x_ul + i) = (1 + 1) * 8 + (2 + 2) = 20

| row 0 | row 1 | row 2 | row 3 | row 4 |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

| | | | | | | | | | |##|##|##|##| | | | |##|##|@@|##| | | | |##|##|##|##| | | | | | | | | | |

+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+--+

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39

5.3 Clearing a FrameBuffer or Viewport

When a FrameBuffer is created, how should its pixel data be initialized?

One possibility is to leave the FrameBuffer's pixel_buffer filled with

whatever Java's array constructor puts in it. A better strategy is to

have specific rules about initializing FrameBuffer objects.

Every FrameBuffer object has a "background color" field. If you do not

specify a FrameBuffer's background color when constructing the FrameBuffer,

the color defaults to black. When a FrameBuffer object is constructed, all

of its pixel data is initialized to the FrameBuffer's background color.

The FrameBuffer class has a clearFB() method that resets all the pixel

data in the FrameBuffer object to the background color. This "erases"

the FrameBuffer.

Every Viewport object also has a "background color" but the rules for

a Viewport are a bit different. When a Viewport is created, its

pixels are not set to its background color. The idea is that creating a

Viewport should not destroy (erase) the pixel data it represents in

the FrameBuffer.

The Viewport class has a clearVP() method that resets all the pixels

represented by the Viewport object to the background color of the

Viewport'. This erases theViewport, which will also erase the part

of theFrameBufferrepresent by theViewport`.

Here are a few rules that summarize the interactions between the FrameBuffer

background color and the background color for any Viewport.

- The default background color for a

FrameBufferis black. - When a new

FrameBufferis created, it is cleared with its background color. - Resetting the background color of a

FrameBuffer' does not clear theFrameBuffer. - The default background color for a

Viewportis the background color of its parentFrameBuffer. - When a new

Viewportis created, it is NOT cleared (so you can copy, or modify, whatever pixels are in that region of theFrameBuffer). - Resetting the background color of a

Viewportdoes not clear theViewport. - Resetting background color of a

FrameBufferdoes not reset the background color of anyViewport, not even the defaultViewport. - Clearing a

FrameBufferwill also clear all itsViewports to the background color of theFrameBuffer.

If you want to see more examples of code that uses the FrameBuffer and

Viewport classes, look at the code in the follow zip file.

http://cs.pnw.edu/~rlkraft/cs45500/for-class/framebuffer-viewport-pixel-examples.zip

6. Scene data structure

A Scene data structure represents a collection of geometric shapes

positioned in three dimensional space.

Here are the data field declarations from the Scene class.

public final class Scene

{

public final Camera camera;

public final List<Position> positionList;

public final String name;

}

A Scene object holds a reference to a Camera object and a reference

to a List of Position objects. A Scene object also holds a reference

to a String that gives the scene a "name". This name is mainly for debugging

and documentation purposes.

Each Position object holds a Model object (which represents a geometric

shape, see below) and a Vector object (which represents a location in

3D camera space). Each Model is positioned, by its Vector, in front of

the Camera which is located at the origin and looks down the negative

z-axis. Each Model object in a Scene object represents a distinct

geometric shape in the scene. A Model object is a List of Vertex

objects and a List of Primitive objects. A Primitive is either a

LineSegment or a Point. Each LineSegment object refers to two of

the Model's Vertex objects. The Vertex objects represent points in

the model's own coordinate system. The model's line segments represent

the geometric object as a "wire-frame", that is, the geometric object

is drawn as a collection of "edges". This is a fairly simplistic way

of doing 3D graphics and we will improve this in later renderers.

https://en.wikipedia.org/wiki/Wire-frame_model

https://www.google.com/search?q=3d+graphics+wireframe&tbm=isch

7. Camera

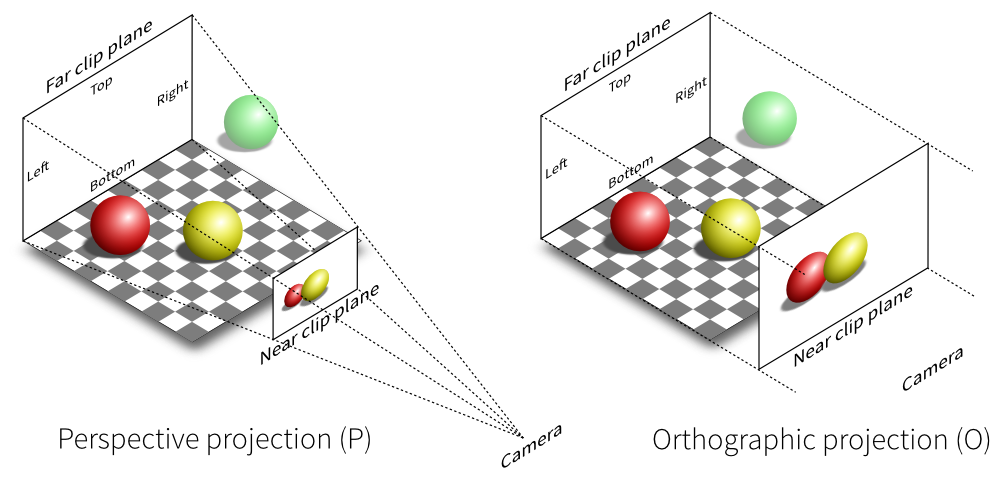

A Camera object represents a camera located at the origin of 3-dimensional

space looking down the negative z-axis. The 3-dimensional space looks like

this.

y-axis

|

2 + -z-axis

| /

| /

| -2 +

| | /

| | / This 3D space is called "camera space".

| | /